Wait… Did ChatGPT Just Grade My Paper?

By: Jesse Chavez-Cordova – Coyote Chronicle

SAN BERNARDINO, CA - Artificial intelligence is showing up in more places on our campus than most of us realize. Here at CSUSB, our professors are allowed to use tools like ChatGPT to help write feedback on our assignments without us knowing it, and there’s currently no policy requiring them to tell us when they do. Right now, the rules on AI are clear for students, with guidelines and limits spelled out, yet faculty have full freedom.

To be clear, this isn’t about blaming any single professor or pointing fingers. It’s about recognizing that the rules at CSUSB haven’t caught up with how fast AI is moving. From the start of the semester, beginning with the class syllabus, students are told very clearly what we can and can’t do with AI, especially when it comes to writing essays or completing assignments. But on the professors’ side, the expectations aren’t as clearly defined. Though this gap hasn’t caused any academic problems within the university, it does leave a lot of room for confusion about how our professors are actually creating feedback.

The university’s Academic Dishonesty Policy (FAM 803.5) makes it clear that students will face sanctions at the discretion of the professor. The policy tells us what happens if students use AI to obtain credit for academic work, but it doesn’t say what happens when professors use AI software. It seems to be fair game for professors to incorporate AI into their teaching as they please. The policy doesn’t mention disclosure, guidelines, or examples of what responsible faculty use of AI could look like in our classrooms. In other words, professors can use AI in their teaching, grading, and even feedback as little or as much as they like when responding to students. On top of that, they don’t have to disclose their use of AI to anyone.

It’s also important to note that none of this is happening in secret. In early 2025, the CSU system bought ChatGPT Edu licenses for all campuses statewide, including CSUSB. CSUSB rolled this out with flair and made ChatGPT accessible to students and professors through MyCoyote. Our campus also started offering workshops that show faculty how to use AI to brainstorm assignments, summarize student writing, and even draft feedback. All of this is available to anyone via the Faculty Center for Excellence CSUSB ChatGPT Edu Resources page. The message the school is sending isn’t “don’t use AI.” Rather, it’s “here’s how to use it responsibly.”

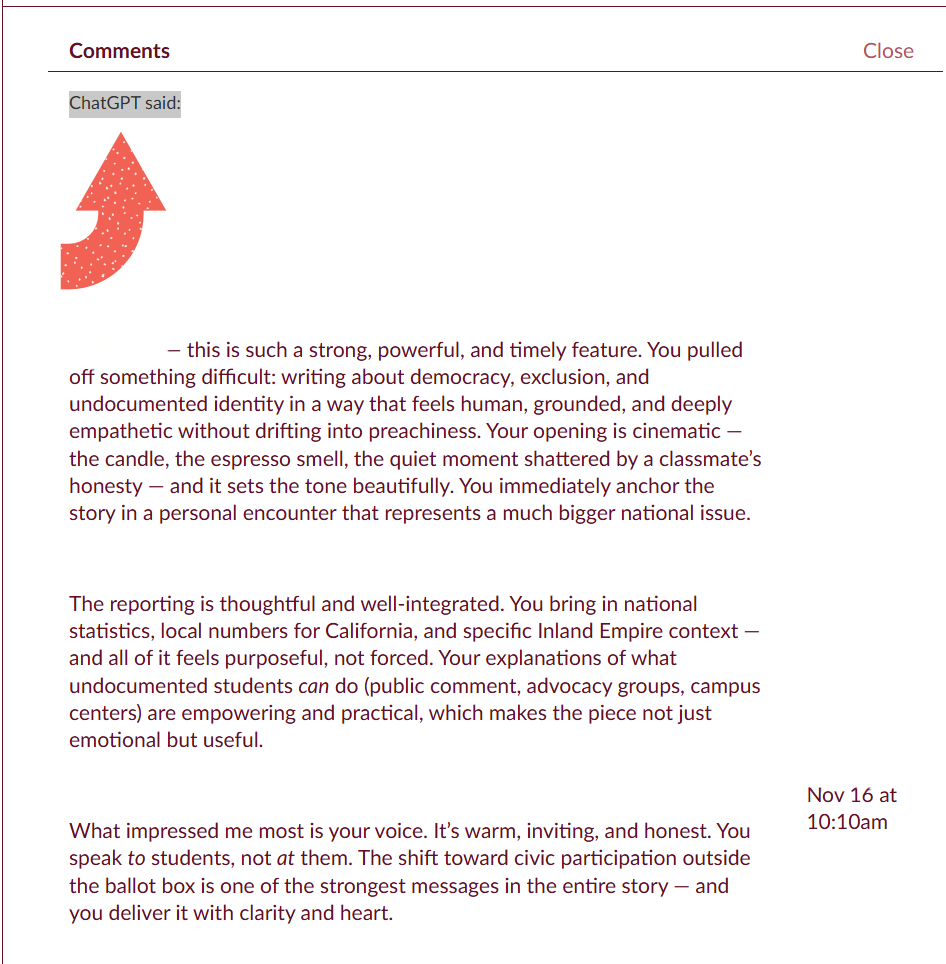

From the student’s perspective, “responsibly” doesn’t always translate to “transparently.” Bernard Aguayo, a senior at CSUSB, says, “I don’t mind AI being used, like I really don’t, but when students face rules and consequences, and like the professors don’t have to say anything, it creates like this weird vibe.” While some students say the feedback on their assignments still feels personal and tailored, others have noticed odd comments that seem generic or are phrased in ways that sound very much like AI. In one recent case, the one that started this investigation, a student’s feedback from their professor began with “ChatGPT says.” Just to be clear, the professor isn’t wrong for using AI to generate student feedback. It just shows how blurry the line can get between genuine feedback and AI feedback.

What all this shows is that students and professors are all still figuring out how AI can be used as a creative tool in the classroom. It’s easy to see how AI can make our professors’ jobs more efficient, which I am not opposed to. It’s the conversation around transparency that hasn’t quite caught up yet.

So the bigger question becomes: what should feedback look like in an AI-assisted classroom? Should students be told when AI helps shape their education?

These aren’t questions we can easily answer just yet, and maybe that’s the point. We’re still early in this shift, and no one truly knows how it could affect students. What we do know is that we as students really do care about the feedback we get from our professors. It’s not that we’re against AI or think it’s unfair that our professors face no consequences. It’s that we still value the human part of the learning process, and AI-generated feedback can leave students feeling disconnected.

As one student in COMM 2391, who asked not to be named, puts it: “You know what, honestly, his feedback doesn’t need to be perfect. It just needs to be human.”